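Because the full Hadoop source tree is too large to take in at once, this post sets up a source-analysis environment for HDFS alone and studies the HDFS source, as groundwork for later analysis of MapReduce, YARN, and other components.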

1. Download hadoop-2.7.3.tar.gz and hadoop-2.7.3-src.tar.gz

Download address (an Apache mirror): http://apache.fayea.com/hadoop/common/hadoop-2.7.3/

Extract both archives:

hadoop-2.7.3.tar.gz is used to set up the local pseudo-distributed environment;

hadoop-2.7.3-src.tar.gz is used to set up the HDFS source environment.
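The extraction step can be sketched as a small shell function. This is only a sketch: `unpack_hadoop` and its two directory arguments are hypothetical names chosen for this example, and it assumes both archives have already been downloaded into one directory.

```shell
# Sketch: extract both Hadoop 2.7.3 archives into one working directory.
# unpack_hadoop and its arguments are hypothetical names for this example.
unpack_hadoop() {
  local download_dir="$1"   # where the two .tar.gz files were saved
  local work_dir="$2"       # where the extracted trees should go
  local archive
  mkdir -p "$work_dir" || return 1
  for archive in hadoop-2.7.3.tar.gz hadoop-2.7.3-src.tar.gz; do
    if [ ! -f "$download_dir/$archive" ]; then
      echo "missing archive: $download_dir/$archive" >&2
      return 1
    fi
    # -C extracts into work_dir instead of the current directory
    tar -xzf "$download_dir/$archive" -C "$work_dir" || return 1
  done
}

# Example: unpack_hadoop "$HOME/Downloads" "$HOME/hadoop-study"
```

After this, the working directory holds hadoop-2.7.3 (binaries) next to hadoop-2.7.3-src (sources).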

2. Set up the HDFS source environment

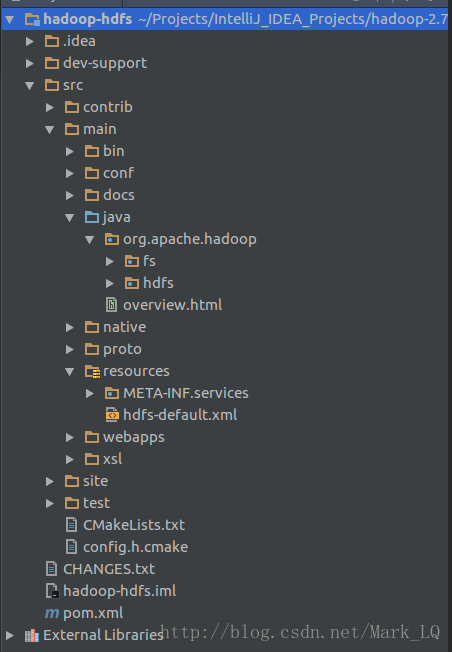

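1] In the extracted directory, go to hadoop-2.7.3-src -> hadoop-hdfs-project -> hadoop-hdfs and copy hadoop-hdfs (a project directory that contains a pom.xml file) to a working directory of your choice. Open it in IDEA as a Maven project; the directory structure then looks like this: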

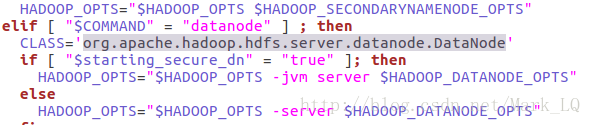

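2] Open the hdfs script in the bin directory of hadoop-2.7.3 and find the class that launches the DataNode: org.apache.hadoop.hdfs.server.datanode.DataNode.

3] Open DataNode in IDEA and run the class. Compilation fails with the following error: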
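Instead of reading the script by eye, the class can be looked up with grep. The sketch below assumes that bin/hdfs dispatches on a `$COMMAND` variable and sets `CLASS=` within a few lines of each branch; `find_hdfs_class` is a hypothetical helper name, so adjust the pattern if your copy of the script is laid out differently.

```shell
# Sketch: print the CLASS= line that follows the branch for one subcommand.
# Assumes branches of the form:  [ "$COMMAND" = "datanode" ]
find_hdfs_class() {
  local script="$1"    # path to the hdfs launcher script
  local command="$2"   # subcommand name, e.g. datanode
  # -F matches the text literally; -A 3 also prints the 3 lines after a match
  grep -F -A 3 "\"\$COMMAND\" = \"$command\"" "$script" | grep -m 1 "CLASS="
}

# Example: find_hdfs_class hadoop-2.7.3/bin/hdfs datanode
```

The same lookup with `namenode` as the second argument should give the entry class for the NameNode.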

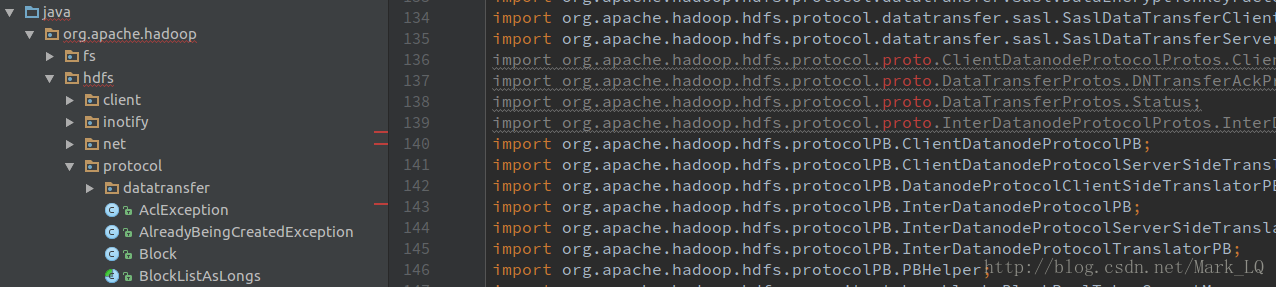

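The package org.apache.hadoop.hdfs.protocol.proto cannot be found.

A search shows that protobuf (Protocol Buffers) is a data interchange format. Hadoop uses it for communication between distributed components and for exchanging data across heterogeneous environments. The missing package is not part of the checked-in sources; it is generated by the protobuf compiler (protoc) during the build.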

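4] Install protobuf.

(1) Download the protobuf source: https://github.com/google/protobuf. Note that the BUILDING.txt shipped with Hadoop 2.7.x lists ProtocolBuffer 2.5.0 as a requirement, so build that release rather than the latest code.

(2) Install the required tools: sudo apt-get install autoconf automake libtool curl make g++ unzip

(3) Run the following commands in order: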

./autogen.sh

./configure

make

make check

sudo make install

sudo ldconfig # important! refresh shared library cache.

Run protoc --version; if it prints a version number, the installation succeeded.

(4) Install the protobuf Java runtime:
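Since the installed version matters as much as the installation itself, the check can compare the reported version against an expected one. This is a minimal sketch: `protoc_version_of` and `protoc_matches` are hypothetical helper names, and 2.5.0 is used in the example because it is the version named in Hadoop 2.7.x's BUILDING.txt.

```shell
# Sketch: pull the version number out of text such as "libprotoc 2.5.0".
protoc_version_of() {
  printf '%s\n' "$1" | awk '{print $2}'
}

# Return 0 when the reported version equals the expected one, 1 otherwise.
protoc_matches() {
  local reported="$1"   # full output of: protoc --version
  local expected="$2"   # version the build expects
  [ "$(protoc_version_of "$reported")" = "$expected" ]
}

# Example:
#   protoc_matches "$(protoc --version)" 2.5.0 && echo "protoc OK"
```

Passing the version text as an argument keeps the helpers usable even on a machine where protoc is not installed yet.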

cd java

mvn install # Install the library into your Maven repository

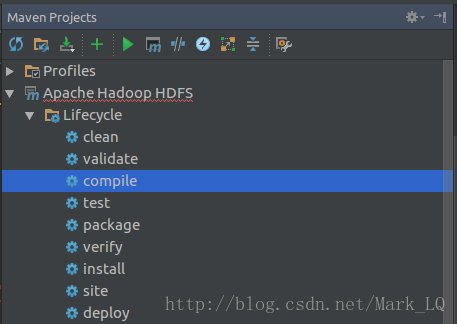

5] Run mvn compile in IDEA:

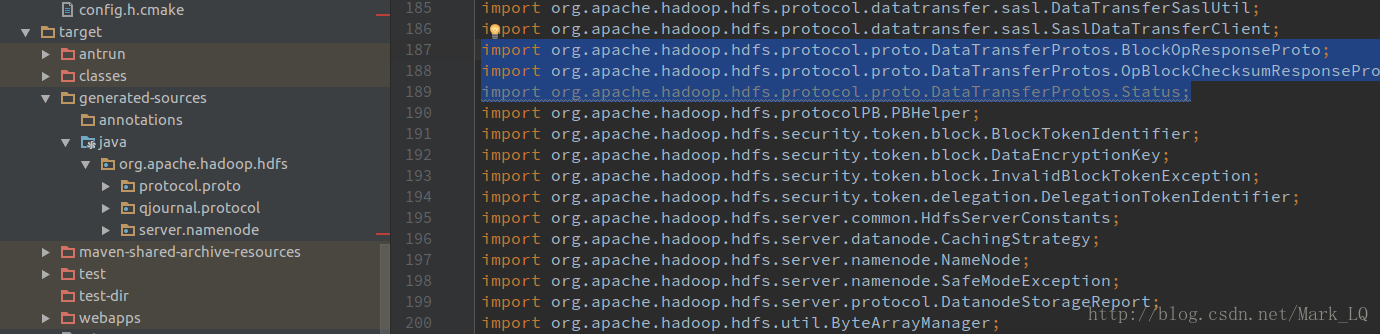

The code is generated as shown below, and the error disappears:

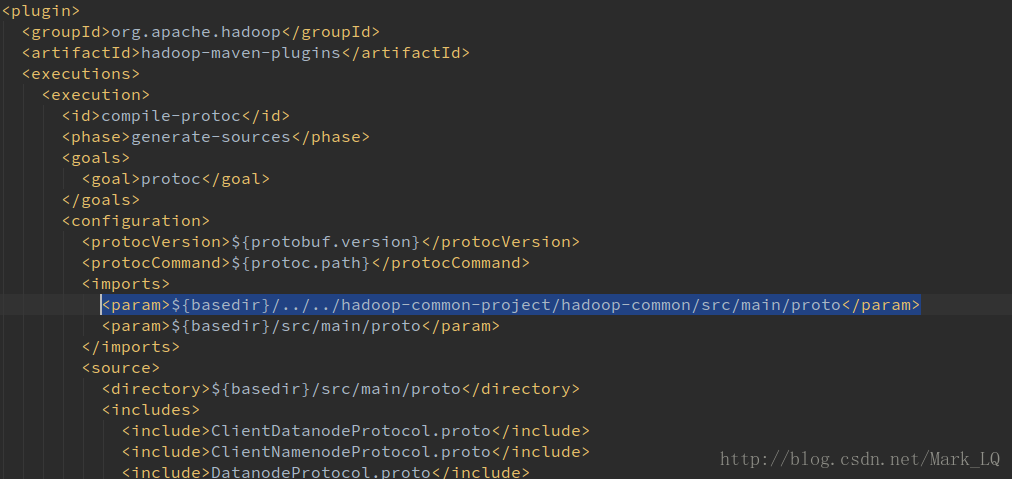
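The result can also be checked from a terminal by counting the generated Java files. The sketch below assumes the protoc plugin writes to target/generated-sources/java inside the project, which is an assumption about the pom.xml configuration rather than something shown above; `count_generated_proto_sources` is a hypothetical helper name.

```shell
# Sketch: count generated .java files in the protocol.proto package.
# The output directory is an assumption; check the protoc plugin's
# configuration in pom.xml if the count is unexpectedly 0.
count_generated_proto_sources() {
  local project_dir="$1"
  local gen_dir="$project_dir/target/generated-sources/java"
  if [ ! -d "$gen_dir" ]; then
    echo 0
    return 0
  fi
  find "$gen_dir" -type f -name '*.java' \
    -path '*/org/apache/hadoop/hdfs/protocol/proto/*' | wc -l | tr -d ' '
}

# Example: count_generated_proto_sources "$HOME/hadoop-study/hadoop-hdfs"
```

A count of 0 after mvn compile suggests that code generation did not run or wrote somewhere else.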

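If code generation fails, check the pom.xml file: the protoc plugin is passed parameters that point into the hadoop-common project, so those paths must be set correctly.

6] Run DataNode again.

(1) The following error is reported:

java.lang.ClassNotFoundException: org.apache.hadoop.tracing.TraceAdminProtocol

Change the scope of the hadoop-auth and hadoop-common dependencies by commenting out their provided scope, so that both are available on the runtime classpath: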

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<!--<scope>provided</scope>-->

</dependency>

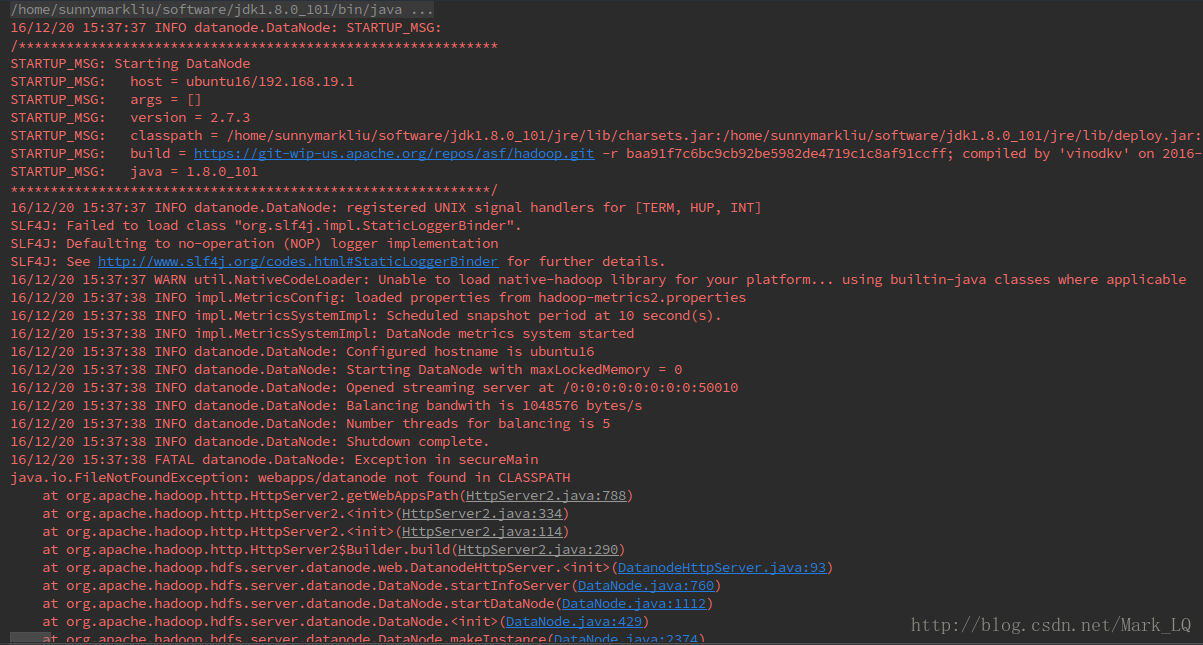

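(2) Copy the configuration files from etc/hadoop under hadoop-2.7.3 into the project's resources directory and run DataNode. The following error is reported: webapps/datanode not found in CLASSPATH.

The simplest fix is to copy the src/main/webapps directory into the resources directory (best avoided), or to add webapps to the CLASSPATH. Then start DataNode again.

DataNode now starts successfully and tries to connect to the NameNode. Because no NameNode has been set up yet, it keeps logging Retrying connect to server: ubuntu16/192.168.19.1:9000.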
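One way to put webapps on the classpath without touching the resources directory is to stage a copy in the compiled-classes directory. This sketch assumes IDEA runs the project with target/classes on the runtime classpath (the usual Maven output directory) and that the web resources live under src/main/webapps; `stage_webapps` is a hypothetical helper name.

```shell
# Sketch: copy src/main/webapps to target/classes/webapps so that the
# resource path webapps/datanode resolves at runtime.
stage_webapps() {
  local project_dir="$1"
  local src="$project_dir/src/main/webapps"
  local dest="$project_dir/target/classes"
  if [ ! -d "$src/datanode" ]; then
    echo "no webapps/datanode under $src" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  # Copies the webapps directory itself, giving $dest/webapps/...
  cp -R "$src" "$dest/"
}

# Example: stage_webapps "$HOME/hadoop-study/hadoop-hdfs"
```

Because target is a build output directory, the copy disappears after a clean build and has to be staged again.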
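The address in the retry message comes from the configuration copied into the resources directory. In Hadoop 2 the NameNode URI is normally set through the fs.defaultFS property in core-site.xml; the sketch below reads it back, assuming the `<name>` and `<value>` elements sit on the same line or on consecutive lines. `namenode_uri` is a hypothetical helper name.

```shell
# Sketch: print the value of fs.defaultFS from a core-site.xml file.
# Assumes <value> is on the same line as <name> or on the next line.
namenode_uri() {
  local core_site="$1"
  grep -A 1 '<name>fs.defaultFS</name>' "$core_site" \
    | sed -n 's:.*<value>\(.*\)</value>.*:\1:p' \
    | head -n 1
}

# Example: namenode_uri src/main/resources/core-site.xml
```

If the printed host and port differ from where the NameNode will actually listen, the DataNode keeps retrying even after the NameNode is up.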

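3. Set up a Hadoop pseudo-distributed environment to provide the NameNode

(1) For the detailed steps, see "Setting up a fully distributed Hadoop 2.7.0 environment on Ubuntu 16.04" (http://www.linuxdiyf.com/linux/27090.html).

Leave the slaves file under etc/hadoop empty, so that the Hadoop scripts do not start a DataNode of their own.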

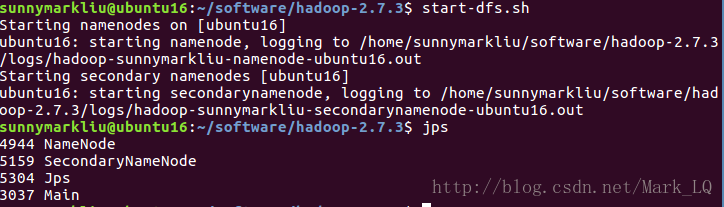

(2) Start the NameNode of hadoop-2.7.3 from the command line.

(3) Start the DataNode from IDEA:
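The exact command is not shown here. One common way in Hadoop 2.x is the sbin/hadoop-daemon.sh script, sketched below; `start_namenode` is a hypothetical wrapper name, and its argument is the directory where hadoop-2.7.3.tar.gz was extracted.

```shell
# Sketch: start only the NameNode daemon of a local Hadoop installation.
# A brand-new NameNode must be formatted once beforehand with
#   bin/hdfs namenode -format
# Formatting erases existing HDFS metadata, so do not repeat it casually.
start_namenode() {
  local hadoop_home="$1"
  local daemon="$hadoop_home/sbin/hadoop-daemon.sh"
  if [ ! -x "$daemon" ]; then
    echo "hadoop-daemon.sh not found under $hadoop_home/sbin" >&2
    return 1
  fi
  "$daemon" start namenode
}

# Example: start_namenode "$HOME/hadoop-study/hadoop-2.7.3"
```

Running jps afterwards should list a NameNode process if the start succeeded.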

2016-12-20 15:52:22,213 INFO datanode.DataNode (LogAdapter.java:info(45)) - STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting DataNode

STARTUP_MSG: host = ubuntu16/192.168.19.1

STARTUP_MSG: args = []

STARTUP_MSG: version = 2.7.3

STARTUP_MSG: classpath = /home/sunnymarkliu/software/jdk1.8.0_101/jre/lib/charsets.jar:...

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff; compiled by 'vinodkv' on 2016-08-18T01:01Z

STARTUP_MSG: java = 1.8.0_101

************************************************************/

2016-12-20 15:52:22,243 INFO datanode.DataNode (LogAdapter.java:info(45)) - registered UNIX signal handlers for [TERM, HUP, INT]

2016-12-20 15:52:24,216 WARN util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2016-12-20 15:52:26,681 INFO impl.MetricsConfig (MetricsConfig.java:loadFirst(112)) - loaded properties from hadoop-metrics2.properties

2016-12-20 15:52:27,267 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:startTimer(375)) - Scheduled snapshot period at 10 second(s).

2016-12-20 15:52:27,267 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:start(192)) - DataNode metrics system started

2016-12-20 15:52:27,274 INFO datanode.BlockScanner (BlockScanner.java:<init>(172)) - Initialized block scanner with targetBytesPerSec 1048576

2016-12-20 15:52:27,313 INFO datanode.DataNode (DataNode.java:<init>(424)) - Configured hostname is ubuntu16

......

2016-12-20 15:52:32,281 INFO datanode.DataNode (BPServiceActor.java:offerService(626)) - For namenode ubuntu16/192.168.19.1:9000 using DELETEREPORT_INTERVAL of 300000 msec BLOCKREPORT_INTERVAL of 21600000msec CACHEREPORT_INTERVAL of 10000msec Initial delay: 0msec; heartBeatInterval=3000

2016-12-20 15:52:32,637 INFO datanode.DataNode (BPOfferService.java:updateActorStatesFromHeartbeat(511)) - Namenode Block pool BP-684189179-192.168.19.1-1482203980767 (Datanode Uuid 2a495dbf-e87e-410f-ad63-9d36bdc3bd22) service to ubuntu16/192.168.19.1:9000 trying to claim ACTIVE state with txid=12

2016-12-20 15:52:32,637 INFO datanode.DataNode (BPOfferService.java:updateActorStatesFromHeartbeat(523)) - Acknowledging ACTIVE Namenode Block pool BP-684189179-192.168.19.1-1482203980767 (Datanode Uuid 2a495dbf-e87e-410f-ad63-9d36bdc3bd22) service to ubuntu16/192.168.19.1:9000

2016-12-20 15:52:32,737 INFO datanode.DataNode (BPServiceActor.java:blockReport(492)) - Successfully sent block report 0x2746d6afc60, containing 1 storage report(s), of which we sent 1. The reports had 0 total blocks and used 1 RPC(s). This took 20 msec to generate and 79 msecs for RPC and NN processing. Got back one command: FinalizeCommand/5.

2016-12-20 15:52:32,737 INFO datanode.DataNode (BPOfferService.java:processCommandFromActive(694)) - Got finalize command for block pool BP-684189179-192.168.19.1-1482203980767

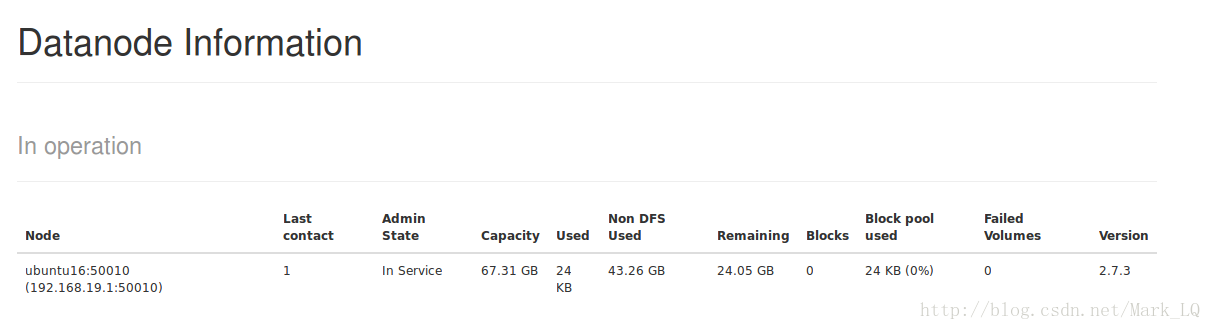

The NameNode and DataNode have both started successfully!
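Two messages in the log above are the useful success markers: the DataNode acknowledging the ACTIVE NameNode and the first block report being sent. If the console output is saved to a file, the check can be scripted. This is a sketch; `datanode_registered` is a hypothetical helper name and the log file path is whatever you saved the output to.

```shell
# Sketch: return 0 only if the log shows both the ACTIVE acknowledgement
# and a successful block report.
datanode_registered() {
  local log_file="$1"
  grep -q 'Acknowledging ACTIVE Namenode' "$log_file" &&
    grep -q 'Successfully sent block report' "$log_file"
}

# Example: datanode_registered datanode.log && echo "DataNode registered"
```

If only retry messages appear instead, the NameNode is not reachable at the configured address.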