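I created three virtual machines running CentOS 7.0 in Workstation 12, one as the master node and two as slave nodes. After preparing the environment I installed Hadoop 2.6.4, finished all the configuration files, and the initialization (format) step reported success. But after running the start command on the master node, no datanode showed up on master:50070 or master:8088. I tried all sorts of fixes from the web and none of them worked; what finally seems to have solved it was editing the /etc/hosts file. The takeaway: the best way to find a problem is to read the logs and follow what they tell you.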

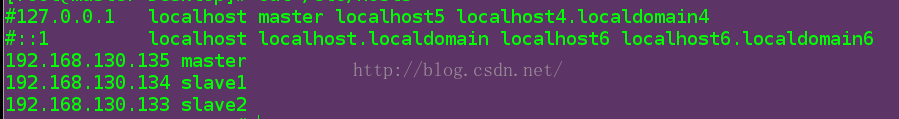

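Looking at the datanode log on a slave node showed that it could not connect to port 9000 on master. Running telnet master 9000 then returned connection refused, so the problem was finally located. Some posts say to turn off the firewall. On CentOS 7.0 it does not seem to be on by default, but I ran the commands to turn it off on all 3 nodes anyway, and that did not help. In the end, commenting out the first two lines of /etc/hosts fixed it.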
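A common cause of this symptom, and only an assumption about what happened here, is that the master's hostname also appears on a loopback line (127.0.0.1 or ::1) in /etc/hosts, so the NameNode ends up listening on loopback only and the slaves are refused. The sketch below is a minimal Python check for that situation. The function name `loopback_entries` and the sample hosts content are hypothetical and not taken from the original machines.

```python
import ipaddress


def loopback_entries(hosts_text, hostname):
    """Return the hosts-file lines that map `hostname` to a loopback address."""
    hits = []
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        fields = line.split()
        addr, names = fields[0], fields[1:]
        try:
            is_loopback = ipaddress.ip_address(addr).is_loopback
        except ValueError:
            continue  # first field is not a valid IP address; skip the line
        if is_loopback and hostname in names:
            hits.append(raw.strip())
    return hits


# Hypothetical hosts content, for illustration only.
SAMPLE_HOSTS = """\
127.0.0.1   localhost master
::1         localhost
192.168.130.135 master
"""

print(loopback_entries(SAMPLE_HOSTS, "master"))
```

On a real node the same function could be called as `loopback_entries(open("/etc/hosts").read(), "master")`. An empty result means the hostname is not bound to loopback in that file.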

Datanode log on the slave node:

2016-08-02 08:17:17,570 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:18,572 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:19,576 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:20,578 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:21,580 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 4 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:22,584 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 5 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:23,587 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 6 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:24,589 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 7 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:25,594 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:26,596 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/192.168.130.135:9000. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2016-08-02 08:17:26,598 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: master/192.168.130.135:9000
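When a log is long, it can help to boil these retry lines down to which server was being contacted and how many retries were made. The following is a rough sketch in Python that does this with a regular expression matched against the line format shown above. The name `summarize_retries` is made up for this example, and the pattern is only known to fit the lines in this post.

```python
import re

# Matches lines such as:
# "... Retrying connect to server: master/192.168.130.135:9000. Already tried 3 time(s); ..."
RETRY = re.compile(
    r"Retrying connect to server: (?P<host>[^/\s]+)/(?P<ip>[\d.]+):(?P<port>\d+)\. "
    r"Already tried (?P<n>\d+) time\(s\)"
)


def summarize_retries(log_text):
    """Map (host, ip, port) to the highest 'Already tried' count seen."""
    summary = {}
    for m in RETRY.finditer(log_text):
        key = (m.group("host"), m.group("ip"), int(m.group("port")))
        summary[key] = max(summary.get(key, 0), int(m.group("n")))
    return summary


# Two lines copied from the datanode log above.
SAMPLE_LOG = (
    "2016-08-02 08:17:17,570 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: "
    "master/192.168.130.135:9000. Already tried 0 time(s); retry policy is "
    "RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)\n"
    "2016-08-02 08:17:26,596 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: "
    "master/192.168.130.135:9000. Already tried 9 time(s); retry policy is "
    "RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)\n"
)

print(summarize_retries(SAMPLE_LOG))
```

A single key with a count of 9 means the datanode used up all ten attempts against one address, which points at the master side (address binding, port, firewall) rather than at the datanode itself.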

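To summarize, here are a few ways to troubleshoot:

1. First check the four configuration files (core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml) for errors;

2. Turn off the firewall:

systemctl stop firewalld.service # stop firewalld

systemctl disable firewalld.service # keep firewalld from starting at boot

3. telnet to port 9000 on the master node (the port configured in core-site.xml);

4. Check the permissions on the tmp, data, and name directories;

5. Some say the cause is that the uuid of the three nodes is inconsistent;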

·········
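Steps 1 and 3 can be combined in a small script: read the NameNode address out of core-site.xml, then try a TCP connection to it, which is roughly what telnet does. This is a minimal sketch in Python, assuming the address is set through the `fs.defaultFS` property (or the older `fs.default.name`). The sample XML is made up to match the master:9000 address seen in the log, and the function names are hypothetical.

```python
import socket
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def namenode_endpoint(core_site_xml):
    """Return (host, port) from fs.defaultFS / fs.default.name, or None if absent."""
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name in ("fs.defaultFS", "fs.default.name"):
            url = urlparse((prop.findtext("value") or "").strip())
            return url.hostname, url.port
    return None


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or hostname could not be resolved
        return False


# Assumed core-site.xml content, for illustration only.
CORE_SITE_SAMPLE = """
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
</configuration>
"""

print(namenode_endpoint(CORE_SITE_SAMPLE))
```

Run from a slave node, `port_open(*namenode_endpoint(xml_text))` returning False corresponds to the refused telnet described earlier. It does not say why the port is unreachable, only that it is.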

Note: the most important step is to re-format. Before re-formatting, delete everything under tmp, hdfs/name, and hdfs/data, which also avoids the uuid mismatch.
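If the "uuid" here refers to the `clusterID` that HDFS records in its storage directories (an assumption, since the post does not say which ID is meant), it can be compared directly. The NameNode and each DataNode keep a `current/VERSION` file under their name and data directories, written as simple `key=value` lines. Below is a minimal Python sketch of that comparison. The helper names and the ID values in the samples are made up.

```python
def read_version(text):
    """Parse the key=value lines of a VERSION file into a dict."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and the timestamp comment
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def same_cluster(namenode_version, datanode_version):
    """Return True only if both VERSION texts carry the same clusterID."""
    nn = read_version(namenode_version).get("clusterID")
    dn = read_version(datanode_version).get("clusterID")
    return nn is not None and nn == dn


# Hypothetical VERSION contents; the IDs are invented for this example.
NN_VERSION = "#comment line\nclusterID=CID-aaaa\nstorageType=NAME_NODE\n"
DN_VERSION_OK = "#comment line\nclusterID=CID-aaaa\nstorageType=DATA_NODE\n"
DN_VERSION_STALE = "#comment line\nclusterID=CID-bbbb\nstorageType=DATA_NODE\n"

print(same_cluster(NN_VERSION, DN_VERSION_OK))
print(same_cluster(NN_VERSION, DN_VERSION_STALE))
```

A mismatch typically appears when the NameNode is re-formatted while old data directories are left in place on the slaves, which is why clearing them before re-formatting avoids it.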